Embeddings are numerical representations of data that capture the semantic meaning of words or phrases. They are encoded as high-dimensional vectors, which makes them efficient to process in a wide range of data applications. Embeddings depend on the model that produces them: the same text will generally have a different embedding when generated by a different model. While text is the primary focus here, embeddings are not limited to text; they can also represent images, graphs, and other data types.

Embeddings are even more valuable in the context of vector databases: we can apply basic similarity or distance measures to check how similar or dissimilar two pieces of text (or other data) are, find the most closely related samples, and so on. They are of fundamental importance, yet using them requires little background.
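As a concrete example of such a measure, the sketch below computes cosine similarity (and cosine distance, defined here as 1 minus the similarity) in plain Python. The three-dimensional vectors are made-up toy values; real embeddings have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def cosine_distance(a, b):
    """Cosine distance: 0 for identical directions, up to 2 for opposite ones."""
    return 1.0 - cosine_similarity(a, b)


# Toy vectors, made up for illustration only
cat = [0.9, 0.1, 0.0]
kitten = [0.8, 0.2, 0.1]
car = [0.0, 0.1, 0.9]

print(cosine_similarity(cat, kitten) > cosine_similarity(cat, car))  # True
```

The ranking, not the absolute value, is what similarity search relies on: the "kitten" vector points in nearly the same direction as "cat", while "car" is almost orthogonal to it.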

In this blog, we'll explore how to generate and use embeddings with models from popular providers such as OpenAI, Jina, and Amazon Bedrock. MyScale's EmbedText() function provides a streamlined approach to working with embeddings, especially when dealing with large-scale data. By leveraging this tool, you can seamlessly integrate embedding-based search and other text-processing capabilities into your applications, all while benefiting from MyScale's performance and cost-effectiveness.

# Embedding Models

Traditionally, we had classical embedding models like Word2Vec, GloVe, and LSA. They were gradually replaced by RNN/LSTM-based models. The unparalleled capabilities of transformers then brought another paradigm shift, and transformers are now the de facto models for embeddings. There are two main ways to generate embeddings:

- Train a model: If you have ample computational resources, or if data privacy is a concern, training a model locally is a good option. Here, training doesn't necessarily mean training a model from scratch; it also includes fine-tuning, which is what we do most of the time. Once trained, the model can be used to generate embeddings.

- Use a pre-trained model: Usually, we don't need to train or even fine-tune a model for embeddings. A set of standard models is used for embedding generation in most cases, such as the GPT series, small language models (SLMs) like the Phi series, Titan, Llama, and Falcon.

With pre-trained models, we have several options. Hugging Face models are an excellent choice if you prefer to run them locally (inference doesn't necessarily require a GPU, so an ordinary laptop will do). On the other hand, many cloud-based services offer embedding models too; OpenAI, Cohere, and Jina are good examples. These services are also useful because some state-of-the-art models (like the GPT-4 family) are not available to run locally.

# Using Models on HuggingFace

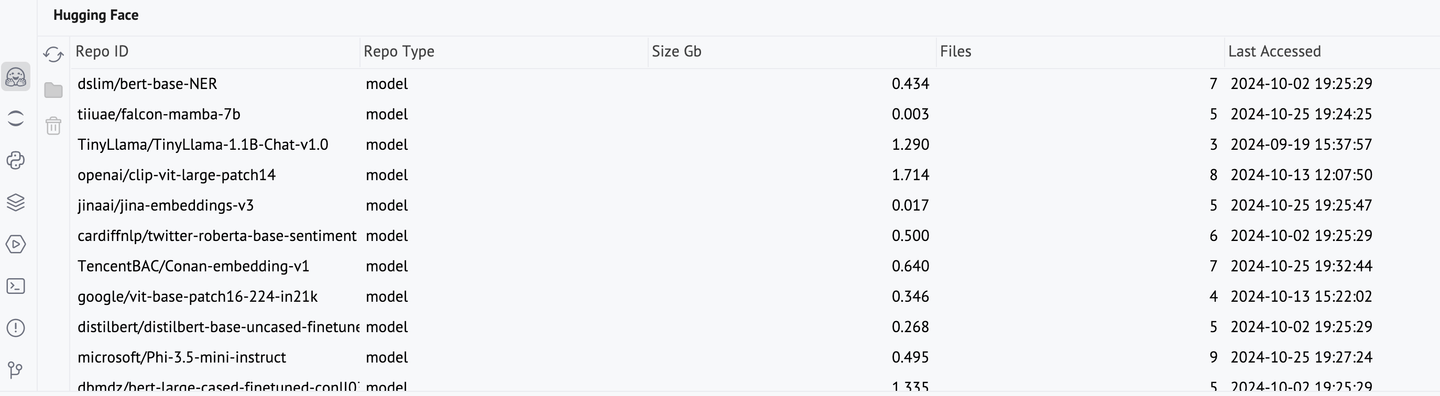

Many transformer models are hosted on Hugging Face, including ones built specifically for embeddings. To use a Hugging Face model, we load the model and its tokenizer. The download may take some time; once downloaded, the files stay in the local cache and can be managed using PyCharm’s Hugging Face console.

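```python
import torch
from transformers import AutoModel, AutoTokenizer

modelName = "TencentBAC/Conan-embedding-v1"

# Download (or load from the local cache) the tokenizer and the model
tokenizer = AutoTokenizer.from_pretrained(modelName)
model = AutoModel.from_pretrained(modelName)

text = "All human beings are born free and equal in dignity and rights. They are endowed with reason and conscience and should act towards one another in a spirit of brotherhood."

# Tokenize the text and return PyTorch tensors
inputs = tokenizer(text, return_tensors="pt")

# Inference only, so gradients are not needed; shape is (batch, tokens, hidden_size)
with torch.no_grad():
    tokenEmbeddings = model(**inputs).last_hidden_state
```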

To get a single vector for the whole sentence, we can pool these token embeddings, for example by averaging them:

```python
# Mean pooling: average over the token dimension to get one vector per input text
sentenceEmbedding = tokenEmbeddings.mean(dim=1)

sentenceEmbedding[0]
```
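A plain `.mean(dim=1)` is fine for a single, unpadded sentence. When several texts are tokenized together with padding, however, the padding positions would be averaged in as well. A common fix is to average only the positions where the attention mask is 1. The sketch below shows that idea in plain Python so the arithmetic is visible; `masked_mean_pool` is a hypothetical helper name, not part of the Transformers API.

```python
def masked_mean_pool(token_embeddings, attention_mask):
    """Average token vectors per text, skipping padded positions.

    token_embeddings: list (batch) of lists (tokens) of vectors (lists of floats)
    attention_mask:   list (batch) of lists (tokens) of 1 (real token) or 0 (padding)
    """
    pooled = []
    for tokens, mask in zip(token_embeddings, attention_mask):
        kept = [vec for vec, m in zip(tokens, mask) if m == 1]
        if not kept:
            raise ValueError("text has no unmasked tokens")
        dim = len(kept[0])
        # Sum each dimension over the real tokens, then divide by their count
        pooled.append([sum(vec[d] for vec in kept) / len(kept) for d in range(dim)])
    return pooled


# Two "texts" of two tokens each; the second one has a padding token at the end
emb = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[2.0, 2.0], [0.0, 0.0]],
]
mask = [[1, 1], [1, 0]]
print(masked_mean_pool(emb, mask))  # [[2.0, 3.0], [2.0, 2.0]]
```

Without the mask, the second text would be pooled to `[1.0, 1.0]`. In PyTorch the same computation is usually written as `mask = inputs["attention_mask"].unsqueeze(-1).float()` followed by `(tokenEmbeddings * mask).sum(dim=1) / mask.sum(dim=1)`.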

Using Hugging Face for embeddings works well, but it can be troublesome in some cases:

- Limited resources: Hugging Face models usually don't take much space (around 1 GB on average), but if you are low on disk space or memory, running them locally is probably not feasible.

- Unavailability of some models: While many models are hosted on Hugging Face, some are available only through dedicated services (GPT-4 quickly comes to mind, but there are others too).

To use these models remotely, we use different services like OpenAI, Amazon Bedrock, Cohere and so on. We can use them in conjunction with MyScale, as we will see.

# Fetching Embeddings with MyScale

As we’ve discussed, embeddings are central to vector database applications. MyScale provides the EmbedText() function to calculate embeddings (text only, so far) using a variety of services, including OpenAI, Cohere, and Jina. This integration is quite helpful because it streamlines the conversion of text into vectors. It supports automatic batching for high throughput and is useful for both real-time search and batch processing. The function takes these essential parameters:

- text: The text string whose embedding we want to calculate, for example 'Thou canst not then be false to any man.'
- provider: The name of the embedding model provider (8 supported so far; case-insensitive), for example OpenAI.
- api_key: The API key for your account with the respective provider. It is issued by the service itself and has nothing to do with MyScale.

Note: All these embedding services charge based on token usage, so it's crucial to avoid repeatedly calculating the same embeddings. Instead, once generated, the embeddings should be saved in a database for efficient reuse.

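In addition to these parameters, there is an optional base_url parameter (not required for most providers), passed as the third argument; the examples below leave it as an empty string ''. Provider-specific settings, such as the model name, are passed as a JSON string in the last argument.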
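Following the note above on token-based billing, one way to avoid paying twice for the same embedding is to memoize requests. The sketch below is a minimal in-memory cache; `EmbeddingCache` and the `embed` callable it wraps are hypothetical names, and in practice the dictionary would be replaced by the database table the note recommends. The provider name is lower-cased because EmbedText() treats it case-insensitively, and the model is part of the key because different models give different embeddings for the same text.

```python
import hashlib
import json


class EmbeddingCache:
    """Memoize embeddings so each (provider, model, text) triple is requested only once.

    `embed` is any callable (provider, model, text) -> list of floats, e.g. a
    wrapper around an EmbedText() query (hypothetical here).
    """

    def __init__(self, embed):
        self._embed = embed
        self._store = {}
        self.calls = 0  # number of uncached (billed) embedding requests

    @staticmethod
    def key(provider, model, text):
        # Hash a canonical JSON encoding so keys stay short even for long texts
        raw = json.dumps([provider.lower(), model, text])
        return hashlib.sha256(raw.encode("utf-8")).hexdigest()

    def get(self, provider, model, text):
        k = self.key(provider, model, text)
        if k not in self._store:
            self.calls += 1
            self._store[k] = self._embed(provider, model, text)
        return self._store[k]
```

For example, `cache.get("OpenAI", "text-embedding-3-small", text)` followed by `cache.get("openai", "text-embedding-3-small", text)` triggers only one underlying request.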

# Using OpenAI Models

The following code snippet shows how to use OpenAI embeddings with EmbedText():

```sql
SELECT EmbedText('<input-text>', 'openAI', '', '<Access Key>', '{"model":"text-embedding-3-small", "batch_size":"50"}')
```

Here we also have optional arguments for the API URL, the output vector dimensions, and a user ID. OpenAI provides three embedding models: Ada-002 and Embedding-3 (small and large). As the table below shows, the large version produces a 3072-dimensional embedding vector, which has better resolution and can be quite useful when dealing with larger documents.

| Model | Description | Output Dimension |
|---|---|---|
| text-embedding-3-large | Most capable embedding model for both English and non-English tasks | 3,072 |
| text-embedding-3-small | Increased performance over 2nd generation Ada embedding model | 1,536 |
| text-embedding-ada-002 | Most capable 2nd generation embedding model, replacing 16 first generation models | 1,536 |
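Since the output length depends on the model, a quick length check before inserting into a fixed-dimension column can catch a model mix-up early. The sketch below is one way to do that; the dictionary restates the table above, `check_embedding_length` is a hypothetical helper, and its `expected` parameter covers the case where a smaller size was requested through the optional output-dimensions argument.

```python
# Default output dimensions, restated from the table above
OPENAI_EMBEDDING_DIMS = {
    "text-embedding-3-large": 3072,
    "text-embedding-3-small": 1536,
    "text-embedding-ada-002": 1536,
}


def check_embedding_length(model, embedding, expected=None):
    """Return the embedding unchanged, or raise ValueError if its length is wrong.

    `expected` overrides the default, e.g. when a smaller output dimension was requested.
    """
    want = expected if expected is not None else OPENAI_EMBEDDING_DIMS[model]
    if len(embedding) != want:
        raise ValueError(f"{model}: expected {want} values, got {len(embedding)}")
    return embedding
```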

To show the power of even the smaller model, let’s take a big chunk of text (a whole chapter) and generate its embedding.

```python
# `client` is a clickhouse_connect client connected to your MyScale cluster
service_provider = 'OpenAI'

textInput = 'We will leave Danglars struggling with the demon of hatred, and\\r\\nendeavoring to insinuate in the ear of the shipowner some evil...' #truncated here deliberately

# The text and provider are bound as server-side query parameters
parameters = {'sampleString': textInput, 'serviceProvider': service_provider}

x = client.query("""
SELECT EmbedText({sampleString:String}, {serviceProvider:String}, '', 'sk-xxxxxxxx', '{"model":"text-embedding-3-small", "batch_size":"50"}')
""", parameters=parameters)

print(x.result_rows[0][0])
```

Note: EmbedText() can take a text up to 5600 characters long.

We take result_rows[0][0] because result_rows is a list of row tuples: the first [0] selects the first (and only) row, and the second [0] selects its single column, the embedding vector.
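Because of the 5600-character limit in the note above, a whole chapter has to be split before embedding. Here is a minimal sketch of one approach, packing whitespace-separated words into chunks that stay within the limit; `chunk_text` is a hypothetical helper, and splitting on words rather than on sentences or paragraphs is a simplifying choice (it also collapses line breaks into single spaces).

```python
def chunk_text(text, max_chars=5600):
    """Split text into chunks of at most max_chars characters, breaking on whitespace.

    A single word longer than max_chars is hard-split so no chunk exceeds the limit.
    """
    chunks, current = [], ""
    for word in text.split():
        # Hard-split any word that alone exceeds the limit
        while len(word) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(word[:max_chars])
            word = word[max_chars:]
        candidate = f"{current} {word}" if current else word
        if len(candidate) <= max_chars:
            current = candidate
        else:
            # Current chunk is full: close it and start a new one with this word
            chunks.append(current)
            current = word
    if current:
        chunks.append(current)
    return chunks


print(chunk_text("a b c", max_chars=3))  # ['a b', 'c']
```

Each chunk can then be embedded with its own EmbedText() call and stored as a separate row.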

# Using Bedrock Models

Amazon Bedrock gives users access to a number of foundation models. Before using a model, you need to request access to it (which is pretty straightforward). Then we can call it directly from MyScale:

```sql
SELECT EmbedText('<input-text>', 'Bedrock', '', '<SECRET_ACCESS_KEY>', '{"model":"amazon.titan-embed-text-v1", "region_name":"us-east-1", "access_key_id":"ACCESS_KEY_ID"}')
```
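Besides the model name, Bedrock needs region_name and access_key_id inside the JSON options string, which is easy to mistype by hand. The sketch below, assuming a clickhouse_connect-style `client.query(sql, parameters=...)` like the one used in this post, builds that JSON with `json.dumps` and binds every argument as a server-side parameter. `build_embed_text_call` is a hypothetical helper, and binding the key and options (the examples here only bind the text and provider) is an assumption worth verifying against your MyScale version.

```python
import json


def build_embed_text_call(text, provider, api_key, options, base_url=""):
    """Return (sql, parameters) for client.query(sql, parameters=parameters).

    Every argument is sent as a bound {name:Type} parameter, so quotes in the text,
    key, or JSON options need no manual escaping. ASSUMPTION: EmbedText() accepts
    bound parameters in all five positions.
    """
    sql = (
        "SELECT EmbedText({text:String}, {provider:String}, "
        "{base_url:String}, {api_key:String}, {options:String})"
    )
    parameters = {
        "text": text,
        "provider": provider,
        "base_url": base_url,  # empty string, as in the examples in this post
        "api_key": api_key,
        "options": json.dumps(options),  # provider-specific settings as a JSON string
    }
    return sql, parameters


sql, params = build_embed_text_call(
    "To be, or not to be, that is the question",
    "Bedrock",
    "<SECRET_ACCESS_KEY>",
    {"model": "amazon.titan-embed-text-v1", "region_name": "us-east-1",
     "access_key_id": "ACCESS_KEY_ID"},
)
# x = client.query(sql, parameters=params)  # requires a live MyScale connection
# embedding = x.result_rows[0][0]
```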

The following example will illustrate it further.

```python
client.command("""
CREATE TABLE IF NOT EXISTS EmbeddingsCollection (
    id UUID,
    sentences String,           -- Text field to store your text data
    embeddings Array(Float32)   -- Embedding vector
    -- , CONSTRAINT check_data_length CHECK length(embeddings) = 1536
) ENGINE = MergeTree()
ORDER BY id;
""")
```

We will save the generated embeddings in EmbeddingsCollection. Next, we take a sample of lines from Hamlet (stored in a list, hamletLines, loaded beforehand) and calculate their embeddings.

```python
service_provider = 'Bedrock'
embeddingModel = 'amazon.titan-embed-text-v1'
embeddingsList = []

# hamletLines: a list of lines from Hamlet, loaded beforehand
for i in range(500, 1000, 30):  # every 30th line between 500 and 1000
    randomLine = hamletLines[i]
    parameters = {'sampleString': randomLine, 'serviceProvider': service_provider, 'model': embeddingModel}

    if len(randomLine) < 30:  # Ignoring too short passages
        continue
    else:
        x = client.query("""
        SELECT EmbedText({sampleString:String}, {serviceProvider:String}, '', '12PXA8xxxxxxxxxxx', '{"model":"amazon.titan-embed-text-v1", "region_name":"us-east-1", "access_key_id":"AKIxxxxxxxx"}')
        """, parameters=parameters)

        # Ask the server for a random UUID to use as the row ID
        _id = client.query("""SELECT generateUUIDv4()
        """)

        embeddingsList.append((_id.result_rows[0][0], randomLine, x.result_rows[0][0]))
```

When appending, we extract the UUID with result_rows[0][0], the same way we extract the embedding vectors, since each query returns a single row containing a single value. embeddingsList can now be inserted into the table and used for tasks like similarity search.
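The loop above makes a separate `SELECT generateUUIDv4()` round trip for every line just to get an ID. A sketch of an alternative generates the UUID on the client with Python's `uuid.uuid4()` and returns rows in the same (id, sentences, embeddings) shape. `build_rows` is a hypothetical helper, and `embed` stands for whatever function wraps the EmbedText() query.

```python
import uuid


def build_rows(lines, embed, start=500, stop=1000, step=30, min_len=30):
    """Return (id, sentence, embedding) tuples for every `step`-th line.

    `embed` is any callable mapping a string to a list of floats; here it would
    wrap the Bedrock EmbedText() query shown above.
    """
    rows = []
    for i in range(start, min(stop, len(lines)), step):
        line = lines[i]
        if len(line) < min_len:  # ignore very short passages
            continue
        rows.append((uuid.uuid4(), line, embed(line)))  # ID generated locally
    return rows


# Example usage (needs a live connection and the hypothetical embed wrapper):
# rows = build_rows(hamletLines, embed)
# client.insert("EmbeddingsCollection", rows, column_names=["id", "sentences", "embeddings"])
```

The filtering and sampling match the loop above; only the ID source changes, which saves one query per line.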

# Using Jina Models

Jina is a relatively new service. To use it, we specify the input text, access key, and model. The default model in MyScale is jina-embeddings-v2-base-en, which returns 768-dimensional embeddings, lower in resolution than those of the other models.

```sql
SELECT EmbedText('<input-text>', 'jina', '', '<access key>', '{"model":"jina-embeddings-v2-base-en"}')
```

Here is an example. As you can see, it is almost the same as with the other providers: EmbedText() abstracts away the differences between providers, so they all feel like more or less the same service.

```python
service_provider = 'jina'

textInput = "The Astronomer's Telegram (ATel) is an internet-based short-notice publication service for quickly disseminating information on new astronomical observations.[1][2] Examples include gamma-ray bursts,[3][4] gravitational microlensing, supernovae, novae, or X-ray transients, but there are no restrictions on content matter. Telegrams are available instantly on the service's website, and distributed to subscribers via email digest within 24 hours"  # truncated here deliberately

parameters = {'sampleString': textInput, 'serviceProvider': service_provider}

x = client.query("""
SELECT EmbedText({sampleString:String}, {serviceProvider:String}, '', 'jina_faxxxxxxx', '{"model":"jina-embeddings-v2-base-en"}')
""", parameters=parameters)

x.result_rows[0][0]
```

# Processing Embeddings with MyScale

Now that we know how to get embeddings with EmbedText(), let's see how to use them. Since generating embeddings costs money, we should save them in the database right after generation. This is straightforward with MyScale: create a table and insert the embeddings into it.

```python
client.command("""
CREATE TABLE IF NOT EXISTS EmbeddingsCollection (
    id UUID,
    sentences String,           -- Text field to store your text data
    embeddings Array(Float32)   -- Embedding vector
    -- , CONSTRAINT check_data_length CHECK length(embeddings) = 1536
) ENGINE = MergeTree()
ORDER BY id;
""")
```

Since we already have a DataFrame (df) with the ID, the input text, and the respective embedding in each row, we can convert it to a list of tuples with itertuples() and insert it using client.insert():

```python
# Convert each DataFrame row into a plain tuple (no index, no namedtuple)
df_records = list(df.itertuples(index=False, name=None))

client.insert("EmbeddingsCollection", df_records, column_names=["id", "sentences", "embeddings"])
```

With data in the table, we can add a vector index, which will be used for similarity search.

```python
client.command("""
ALTER TABLE EmbeddingsCollection
ADD VECTOR INDEX cosine_idx embeddings
TYPE MSTG('metric_type=Cosine')
""")
```

# Efficiency

Having done the main work, it is pretty easy to see why embeddings are so useful and how efficient MyScale is. For example, after storing the embeddings for the whole book, we can perform some searches.

```python
service_provider = 'jina'

queryString = "<your-query-text>"  # the search query

parameters = {'sampleString': queryString, 'serviceProvider': service_provider}

# Embed the query with the same model that was used for the stored embeddings
x = client.query("""
SELECT EmbedText({sampleString:String}, {serviceProvider:String}, '', 'jina_faxxxxxxx', '{"model":"jina-embeddings-v2-base-en"}')
""", parameters=parameters)

queryEmbeddings = x.result_rows[0][0]
```

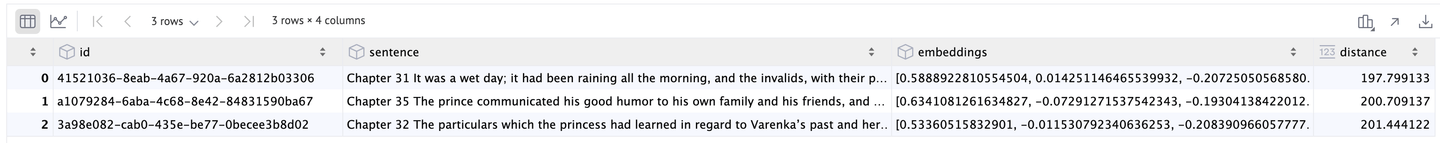

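```python
import pandas as pd

# Rank stored passages by distance to the query embedding and keep the 3 closest
results = client.query(f"""
SELECT id, sentences, embeddings,
distance(embeddings, {queryEmbeddings}) as dist
FROM BookEmbeddings
ORDER BY dist LIMIT 3
""")

df = pd.DataFrame(results.result_rows, columns=results.column_names)
```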

As a result, we get the three passages closest to the query.
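To sanity-check what the query returns on a small sample, an exact brute-force search in plain Python computes every distance and keeps the smallest ones, which is what `ORDER BY dist LIMIT 3` asks for. Vector indexes such as MSTG are built to answer this much faster on large tables. The sketch assumes rows shaped like embeddingsList, and it uses cosine distance (1 minus cosine similarity) to match the `metric_type=Cosine` index; that this is exactly the value `distance()` reports is an assumption. `top_k` is a hypothetical helper.

```python
import heapq
import math


def cosine_distance(a, b):
    """1 - cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norms


def top_k(query, rows, k=3):
    """Exact nearest neighbours over (id, sentence, embedding) rows.

    Returns up to k tuples (id, sentence, dist), closest first.
    """
    scored = [(_id, text, cosine_distance(emb, query)) for _id, text, emb in rows]
    return heapq.nsmallest(k, scored, key=lambda r: r[2])
```

Comparing `top_k(queryEmbeddings, embeddingsList)` with the SQL result on the same rows is a quick way to confirm the index is returning sensible neighbours.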

With these significant benefits—seamless integration of embedding models, impressive performance, and cost savings—MyScale proves to be a powerful platform for any data-driven application.

# Conclusion

By utilizing MyScale's EmbedText() function, we've demonstrated how seamlessly you can integrate popular embedding models like OpenAI, Bedrock, and Jina into your applications. Generating and processing embeddings directly within MyScale not only simplifies your workflow but also delivers impressive performance—even when handling large-scale data.

For example, performing a similarity search through an 800+ page book in just a couple of seconds showcases MyScale's efficiency and speed. Such rapid processing would be challenging with traditional NLP models. Moreover, MyScale's capabilities aren't limited to text; it can handle embeddings for images and other data types, opening up a multitude of application possibilities.

What sets MyScale apart is not just its performance but also its cost-effectiveness. Unlike other vector databases, MyScale offers exceptional flexibility and features without the hefty price tag. Even when scaling up to manage 80 million vectors, the costs remain remarkably low—with 8 pods running at less than $0.10 per hour each.

This affordability makes MyScale a practical choice for startups and established businesses alike. In essence, MyScale empowers you to harness the full potential of embeddings, providing a powerful, scalable, and economical solution for modern data applications.