On November 6, 2023, OpenAI announced the release of their GPTs. On this no-code platform, as a professional (or hobbyist) developer, you can build customized GPTs or chatbots using your tools and prompts, effectively changing your interactions with OpenAI's GPT. Previous interactions mandated using dynamic prompting to retrieve responses from GPT with LangChain (opens new window) or LlamaIndex (opens new window). Now the OpenAI GPTs handle your dynamic prompting by calling external APIs or tools.

This also changes how we (at MyScale) build RAG systems, from building prompts with server-side contexts to injecting these contexts into the GPTs model.

MyScale simplifies how you inject contexts into your GPTs. For instance, OpenAI's method is to upload files to the GPTs platform via a Web UI. Juxtapositionally, MyScale allows you to mix structured data filtering and semantic search using a SQL WHERE clause (opens new window), process and store a much larger knowledge base at a lower cost, as well as share one knowledge base across multiple GPTs.

Try out MyScaleGPT now 🚀 on GPT Store, or integrate MyScale’s open knowledge base with your app today with our API hosted on Hugging Face:

# BYOK: Bring Your Own Knowledge

GPT has evolved considerably during the past year, and it knows much more in the shared knowledge domain than it did when it was first released. However, there are still specific topics it knows nothing about or is uncertain about—like domain-specific knowledge and current events. Therefore, as described in our earlier articles (opens new window), integrating an external knowledge base—stored in MyScale—into GPT is mandatory, boosting its truthfulness and helpfulness.

We brought an LLM into our chain (or stack) when we were building RAG with MyScale (opens new window). This time, we need to bring a MyScale database to the GPTs platform. Unfortunately, it is not currently possible to directly establish connections between GPTs and MyScale. So, we tweaked the query interface, exposing it as a REST API.

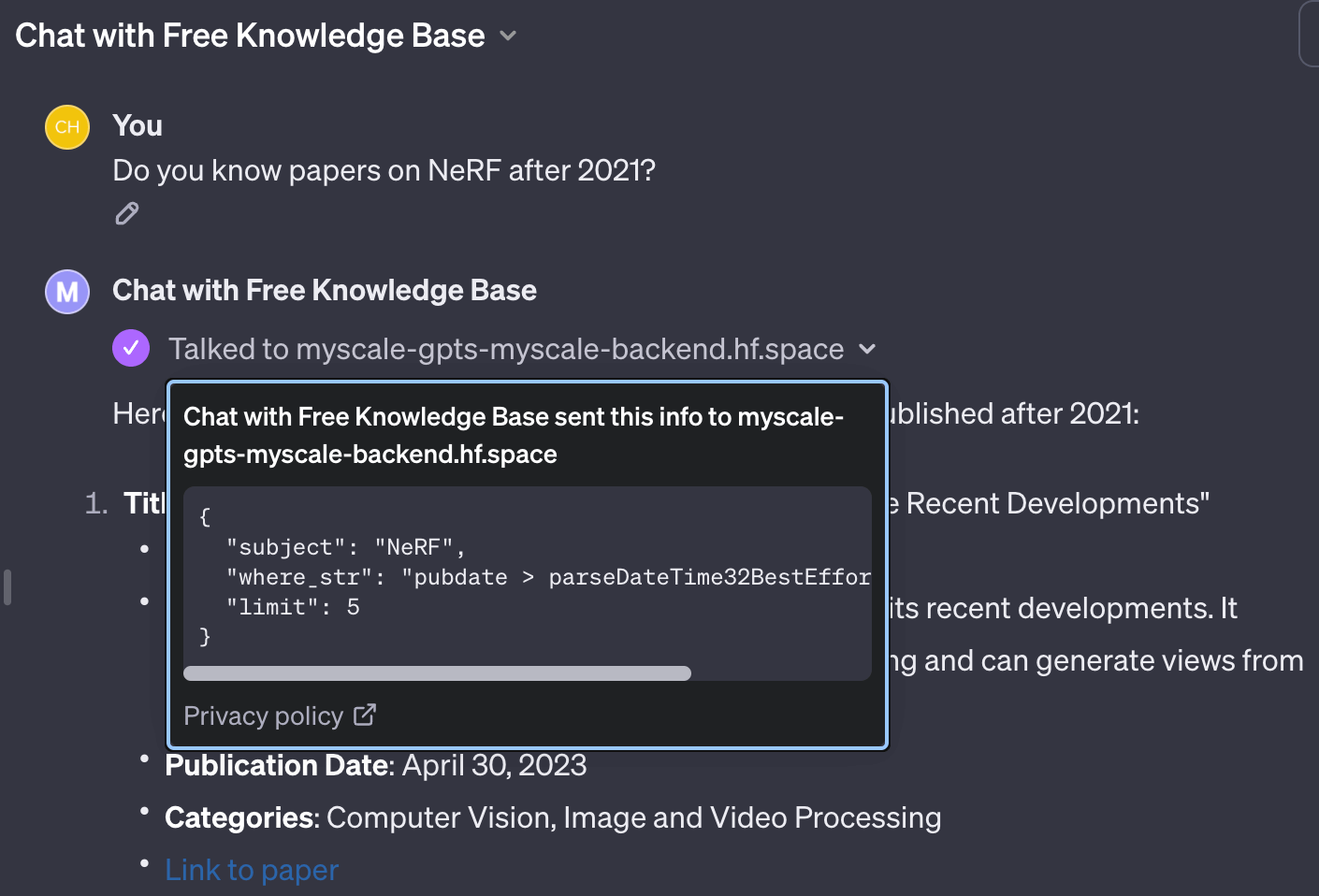

Due to our earlier success with OpenAI function call (opens new window), we can now design a similar interface where GPT can write vector search queries with SQL-like filter strings. The parameters are written in OpenAPI (opens new window) as follows:

"parameters": [

{

"name": "subject",

"in": "query",

"description": "A sentence or phrase describes the subject you want to query.",

"required": true,

"schema": {

"type": "string"

}

},

{

"name": "where_str",

"in": "query",

"description": "a SQL-like where string to build filter",

"required": true,

"schema": {

"type": "string"

}

},

{

"name": "limit",

"in": "query",

"description": "desired number of retrieved documents",

"schema": {

"type": "integer",

"default": 4

}

}

]

With such an interface, GPT can extract keywords to describe the desired query with filters written in SQL.

# Providing Query Entries to Different Tables

We may sometimes have to query different tables. This can be implemented using separate API entries. Each API entry holds its own schema and prompts under its documentation. GPTs will read the applicable API documentation and write the correct queries to the corresponding table.

Notably, the methods we introduced before, like self-querying retrievers (opens new window) and vector SQL (opens new window), require dynamic or semi-dynamic prompting to describe the table structure. Instead, GPTs function like conversational agents in LangChain (opens new window), where agents use different tools to query tables.

For instance, the API entries can be written in OpenAPI 3.0 as follows:

"paths": {

// query entry to arxiv table

"/get_related_arxiv": {

"get": {

// descriptions will be injected into the tool prompt

// so that GPT will know how and when to use this query tool

"description": "Get some related papers."

"You should use schema here:\n"

"CREATE TABLE ArXiv ("

" `id` String,"

" `abstract` String,"

" `pubdate` DateTime,"

" `title` String,"

" `categories` Array(String), -- arxiv category"

" `authors` Array(String),"

" `comment` String,"

"ORDER BY id",

"operationId": "get_related_arxiv",

"parameters": [

// parameters mentioned above

],

}

},

// query entry to wiki table

"/get_related_wiki": {

"get": {

"description": "Get some related wiki pages. "

"You should use schema here:\n\n"

"CREATE TABLE Wikipedia ("

" `id` String,"

" `text` String,"

" `title` String,"

" `view` Float32,"

" `url` String, -- URL to this wiki page"

"ORDER BY id\n"

"You should avoid using LIKE on long text columns.",

"operationId": "get_related_wiki",

"parameters": [

// parameters mentioned above

]

}

}

}

Based on this code snippet, GPT knows there are two knowledge bases that can help answer the user’s questions.

After configuring the GPT Actions for knowledge base retrieval, we simply fill the Instructions and tell the GPT how to query the knowledge bases and then answer the user question:

Do your best to answer the questions. Feel free to use any tools available to look up relevant information. Please keep all details in query when calling search functions. When querying using MyScale knowledge bases, for array of strings, please use

has(column, value to match). For publication date, useparseDateTime32BestEffort()to convert timestamps values from string format into date-time objects, you NEVER convert the date-time typed columns with this function. You should always add reference links to documents you used.

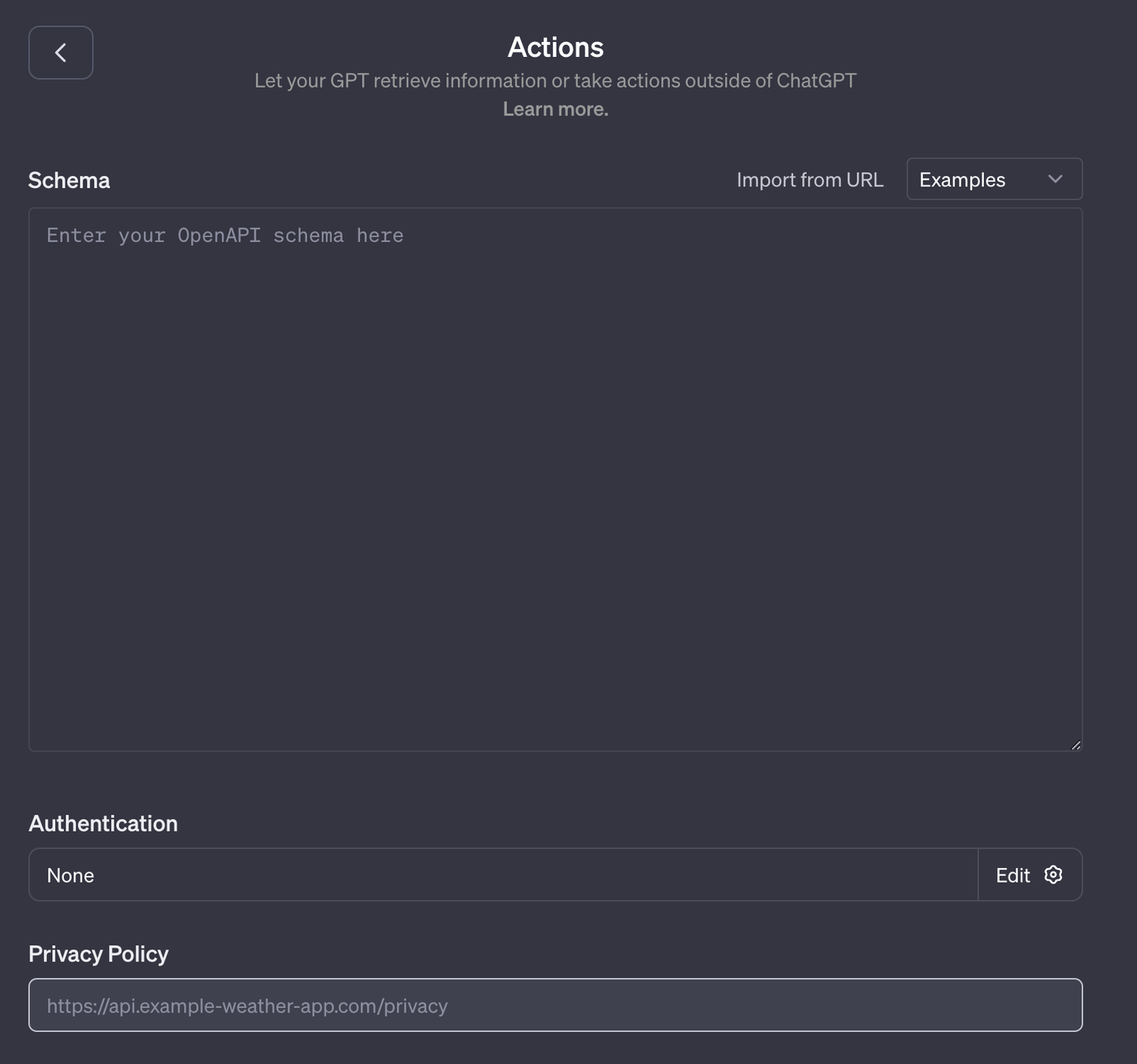

# Hosting Your Database as OpenAPI

GPTs adapt APIs under the OpenAI 3.0 standard. Some applications, like databases, do not have OpenAPI interfaces. So, we need to use middleware to integrate GPTs with MyScale.

We have hosted our database with OpenAI-compatible interfaces on Hugging Face (opens new window). We used flask-restx (opens new window) to simplify and automate the implementation so the code is small, clean, and easy to read: app.py (opens new window), funcs.py (opens new window).

The good thing about this is both the prompts and the functions are bound together. Therefore, you don’t need to overthink the combination of prompting, functionality, and extendibility; write it in a human-readable format, and that’s it. The GPT will read this documentation from a dumped OpenAI JSON file.

Note:

flask-restx only generates APIs in a Swagger 2.0 format. You must convert them into an OpenAPI 3.0 format with Swagger Editor (opens new window) first. You can use our JSON API on Hugging Face (opens new window) as a reference.

# GPT Running with Contexts from an API

With proper instructions, the GPT will use special functions to handle different data types carefully. Examples of these data types include ClickHouse SQL functions like has(column, value) for array columns and parseDateTime32BestEffort(value) for timestamp columns.

After sending the correct query to the API, it—or the API—will construct our vector search query using filters in WHERE clause strings. The returned values are formatted into strings as extra knowledge retrieved from the database. As the following code sample describes, this implementation is quite simple.

class ArXivKnowledgeBase:

def __init__(self, embedding: SentenceTransformer) -> None:

# This is our open knowledge base that contains default.ChatArXiv and wiki.Wikipedia

self.db = clickhouse_connect.get_client(

host='msc-950b9f1f.us-east-1.aws.myscale.com',

port=443,

username='chatdata',

password='myscale_rocks'

)

self.embedding: SentenceTransformer = INSTRUCTOR('hkunlp/instructor-xl')

self.table: str = 'default.ChatArXiv'

self.embedding_col = "vector"

self.must_have_cols: List[str] = ['id', 'abstract', 'authors', 'categories', 'comment', 'title', 'pubdate']

def __call__(self, subject: str, where_str: str = None, limit: int = 5) -> Tuple[str, int]:

q_emb = self.embedding.encode(subject).tolist()

q_emb_str = ",".join(map(str, q_emb))

if where_str:

where_str = f"WHERE {where_str}"

else:

where_str = ""

# Simply inject the query vector and where_str into the query

# And you can check it if you want

q_str = f"""

SELECT dist, {','.join(self.must_have_cols)}

FROM {self.table}

{where_str}

ORDER BY distance({self.embedding_col}, [{q_emb_str}])

AS dist ASC

LIMIT {limit}

"""

docs = [r for r in self.db.query(q_str).named_results()]

return '\n'.join([str(d) for d in docs]), len(docs)

# Conclusion

GPTs are indeed a significant improvement to OpenAI’s developer interface. Engineers don’t have to write too much code to build their chatbots, and tools can now be self-contained with prompts. We think it is beautiful to create an eco-system for GPTs. On the other hand, it will also encourage the open-source community to rethink existing ways to combine LLMs and tools.

We are very excited to dive into this new challenge, and as always, we are looking for new approaches to integrate vector databases—like MyScale—with LLMs. We firmly believe that bringing an external knowledge base—stored in an external database—will improve your LLM's truthfulness and helpfulness.

Add MyScaleGPT (opens new window) to your account now. Or join us on Discord (opens new window) or Twitter (opens new window) to start a deep, meaningful discussion on LLM and Database integration today.